Introduction

We recently published an App in the Atlassian Marketplace and saw that there is a statistics REST API available. That raised the question “What has it got in its pocketses?”. We wanted to get a hold of all this statistics data and display it in our own KPI dashboard.

Since our infrastructure is fully Kubernetes driven we were looking for a dockerized KPI Dashboard utility and found Metabase which is just super awesome. Metabase will connect to your database and you can create custom queries that let you display charts and dashboards. The main idea behind Metabase is, that anyone in a company can “ask for data”, will be able to receive it easily and can learn from it.

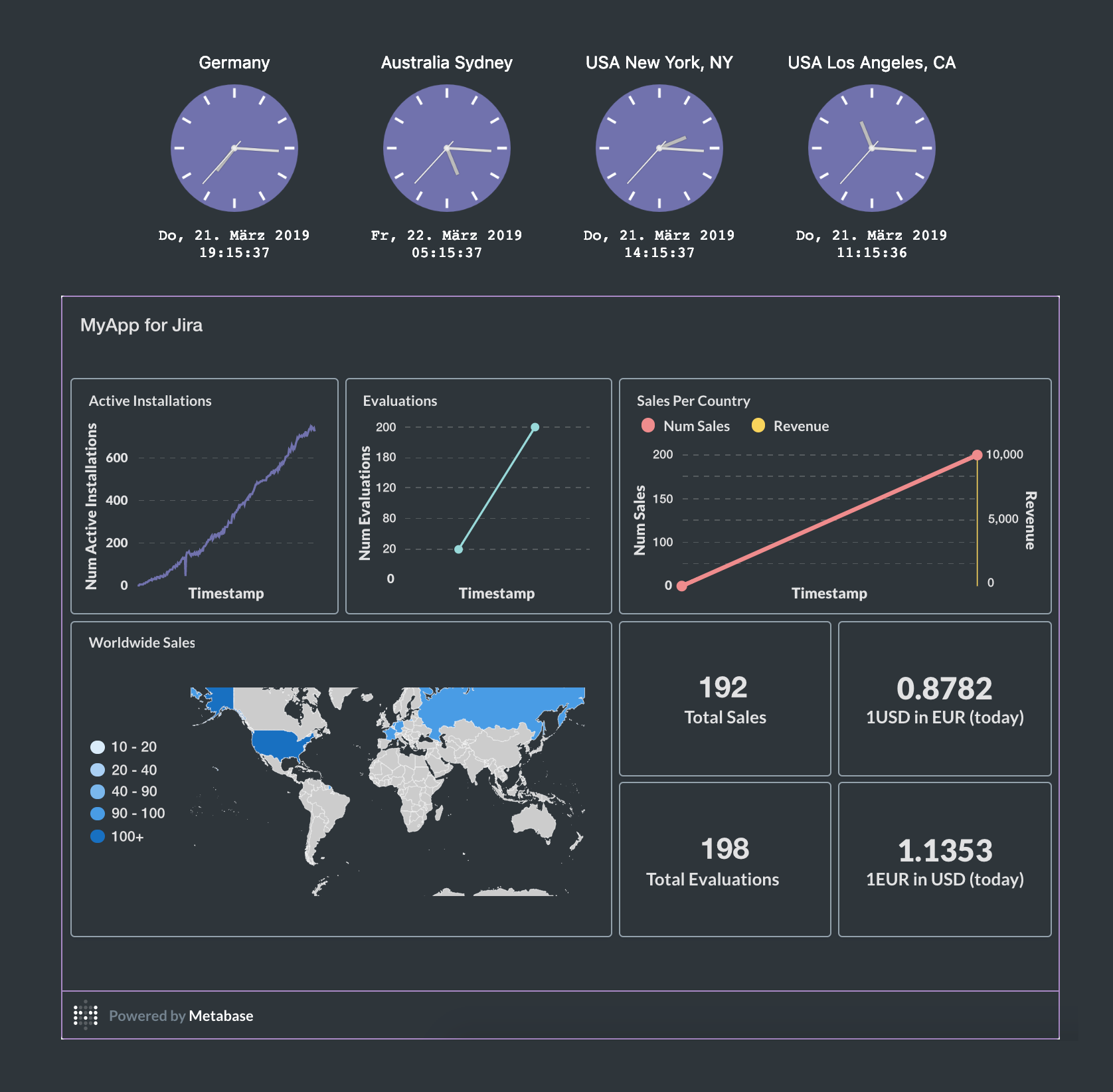

After two days of fiddeling around and writing sync scripts for a number of things I ended up with this super nice dashboard. Metabase let’s you embed your Dashboards on external sites. I created a static website and added some world-time clocks on top from timeanddate.com and below the Metabase dashboard widget. The dashboard fits perfectly on any iPad at best with the FullScreen Browser app.

Since all the topics I could cover in this BlogPost would fill bookshelfs, I decided to focus on the USD to EUR rates and how I sync them with data from the European Central Bank on a daily basis. For the sync scripts I use NodeJS and deploy them serverlessly with fission.io, which is a Kubernetes powered serverless framework.

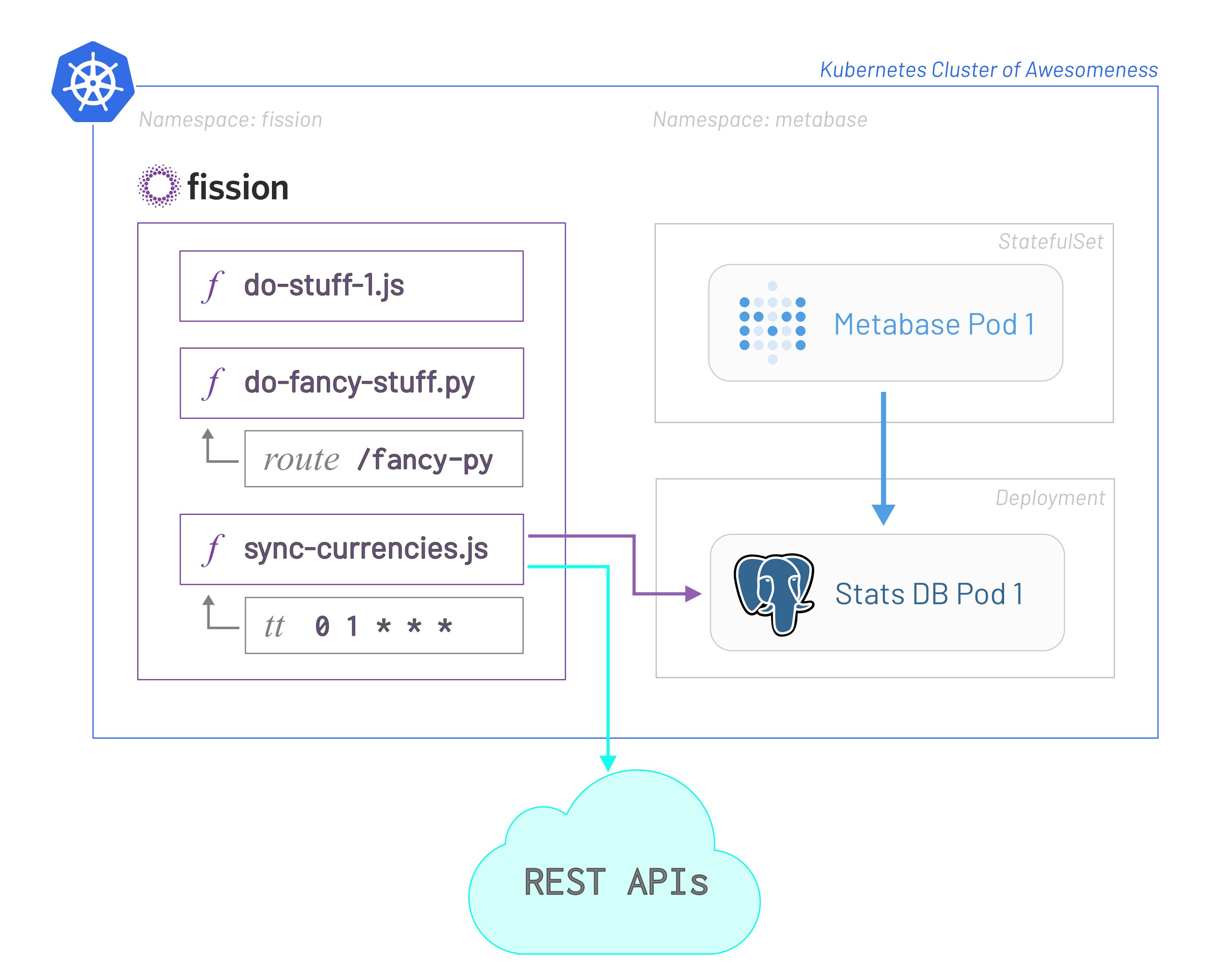

As the big picture above shows, we have our running kubernetes cluster that runs the serverless framework fission.io. We deployed metabase to its own k8s namespace and expose it via a service and NodePort since this is a bare-metal cluster with single master (the poor mans cluster :D ). We also deployed a postgresql database called stats into the metabase namespace. From our serverless sync scripts we write directly into the stats database. The sync scripts call different APIs, transform data, write to stats db and wait to be triggered again.

Installing Metabase in our Kubernetes Cluster

This part will mostly be just copy-paste instructions, if you plan to install these tools yourself.

Since we run everything on a bare metal cluster with some nodes we specify node affinity via ‘nodeName: tibatong’ where needed, to force Pods to be started on a specific node. First we need to setup the hostPaths which will be used for persistent data (you can also use VolumeClaims if you like).

All my containers run with uid=10777(dockerworker) gid=10777(dockerworker) and that is why we create the persistent data path like so:

shell:mkdir /opt/k8s-host-path-volumes/metabase && chown dockerworker:dockerworker /opt/k8s-host-path-volumes/metabase

Now we create the Namespace.

shell:kubectl create namespace metabase

The metabase container itself starts as root internally and will then switch to the uid and gid we specify via Env variables (MUID=10777,MGID=10777). You will need to adapt this to your needs. I decided to let Metabase run with its default H2 Memory Database. You could setup it to use a dedicated database too, at best check the Running Metabase on Docker Guide.

github:5288b63029f350da32a824b52349c90a

Now we create the StatefulSet and the Service which exposes Metabase on TCP Port 32622.

shell:kubectl --namespace=metabase create -f k8s-metabase-stateful-set-and-service.yml

That should all run smoothly and you might have other solutions for this, but I put a NGINX SSL Proxy in front of Metabase. The Header ‘X-Frame-Options: ALLOWALL’ will help us later to embed dashbaords to external pages.

github:3c78e18a0dec61af5298d2af83533a5a

We can now access metabase via https://metabase.k8s.home.mydomain.io and finish the setup wizard.

Installing PostgreSQL Stats Database as k8s Pod

Same as for Metabase we first need a HostPath for our persistent data.

shell:mkdir /opt/k8s-host-path-volumes/metabase-stats-db && chown dockerworker:dockerworker /opt/k8s-host-path-volumes/metabase-stats-db

github:ea066ac9b8af7aa8654496a92d9b94f0

Create the database and expose NodePort 32662 so that we can also use our favorite Database tool to connect to the db.

shell:kubectl --namespace=metabase create -f metabase-stats-db.yml

Now we connect to the database and create our schema and tables. I will only create the currency tables since we will focus on that mainly.

shell:kubectl -n metabase run -it --env="PGPASSWORD=password" --rm --image=postgres:10-alpine --restart=Never psql -- psql -h metabase-stats-db -U postgres

We need to create a dedicated user for metabase to query the stats db too. Therefore paste this SQL statements.

github:2830ee2d0e42d973954d87e4f338a07a

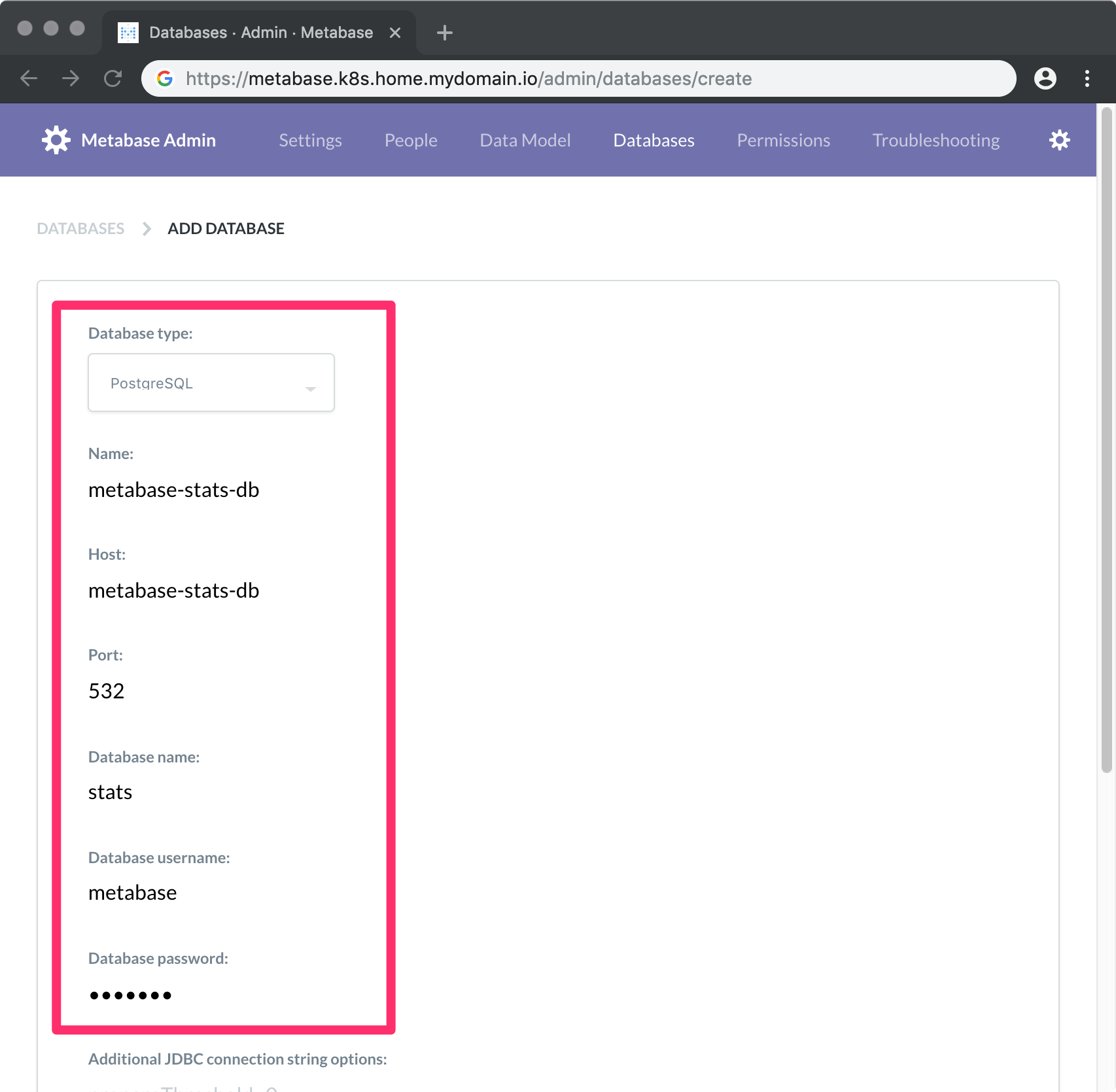

Connecting Metabase to Stats Database

We can now go to the Metabase admin and connect our stats Database like so:

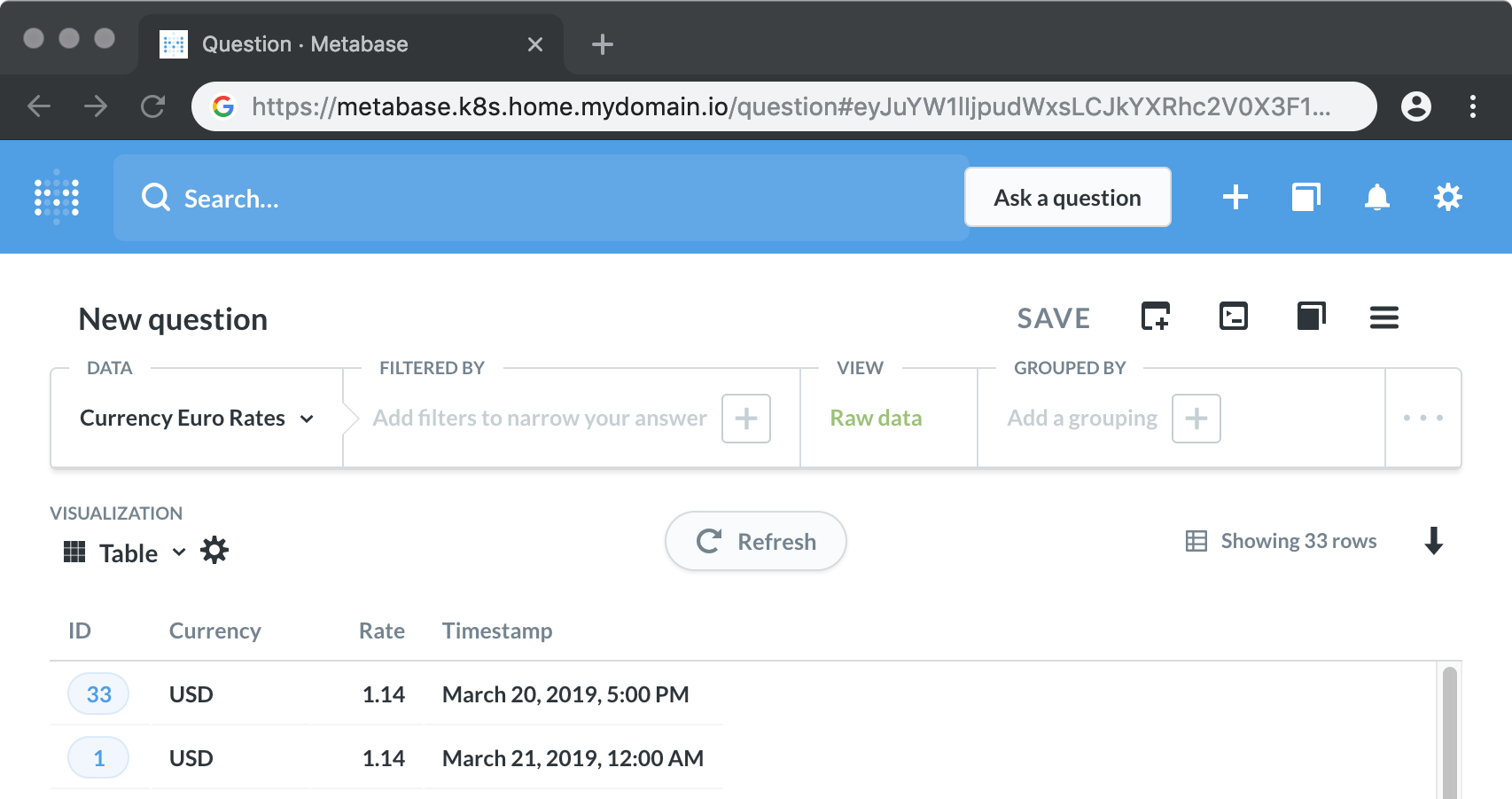

Right after it will take some time for Metabase to scan the schema and then we can “ask our first question”. Simply asking for ‘Currency Euro Rates’ will give us a simple table view.

Metabase Questions for today’s exchange rate USD-EUR

Ok now comes the cool part about Metabase. As long as you have your data in a kind of “time series” data format stored in the database we can work with that.

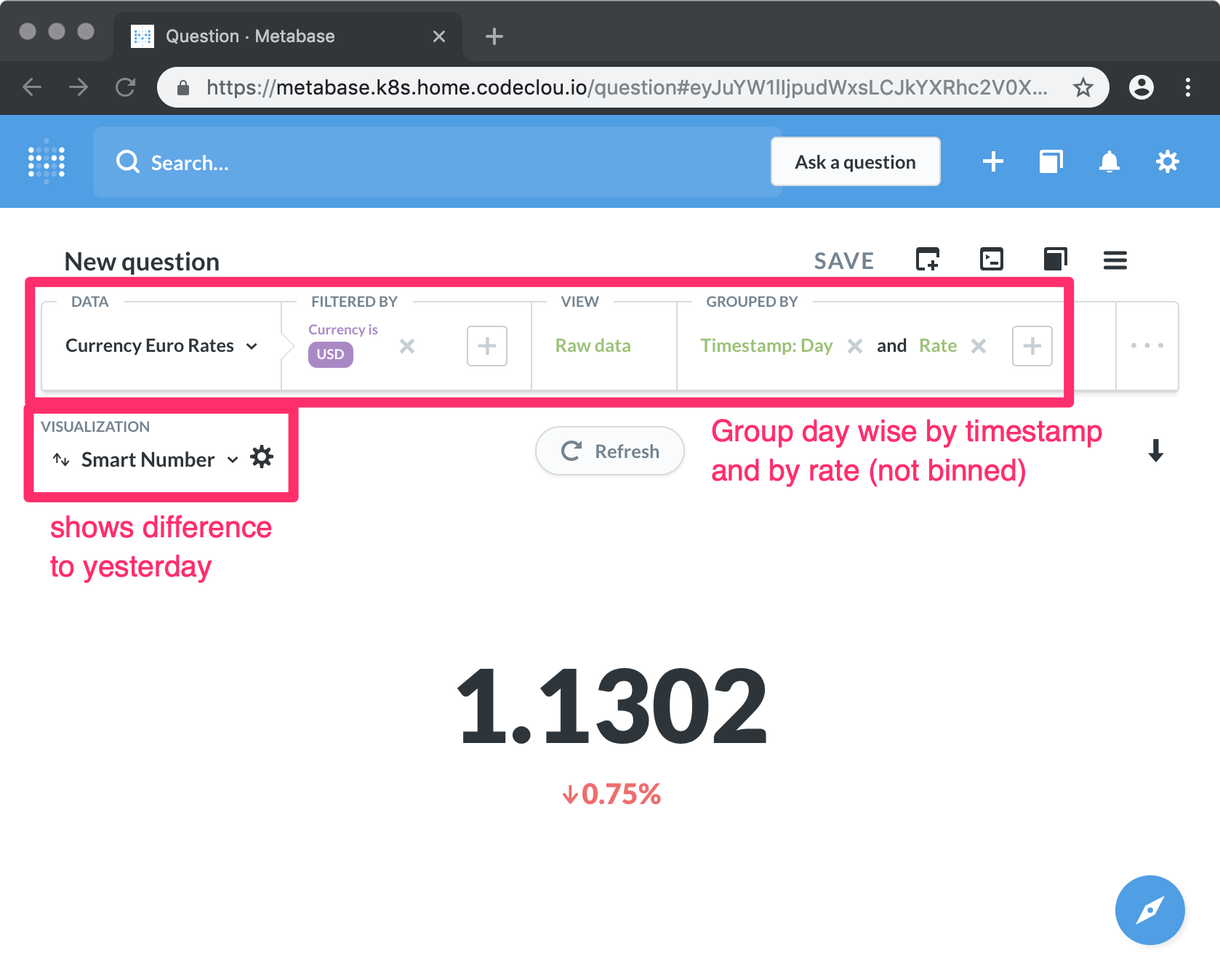

To display the today’s rate for EUR to USD we can simply create a Question and visualize it as Number. We Filter by USD and use Number as visualization. Metabase is smart and will use the most latest row.

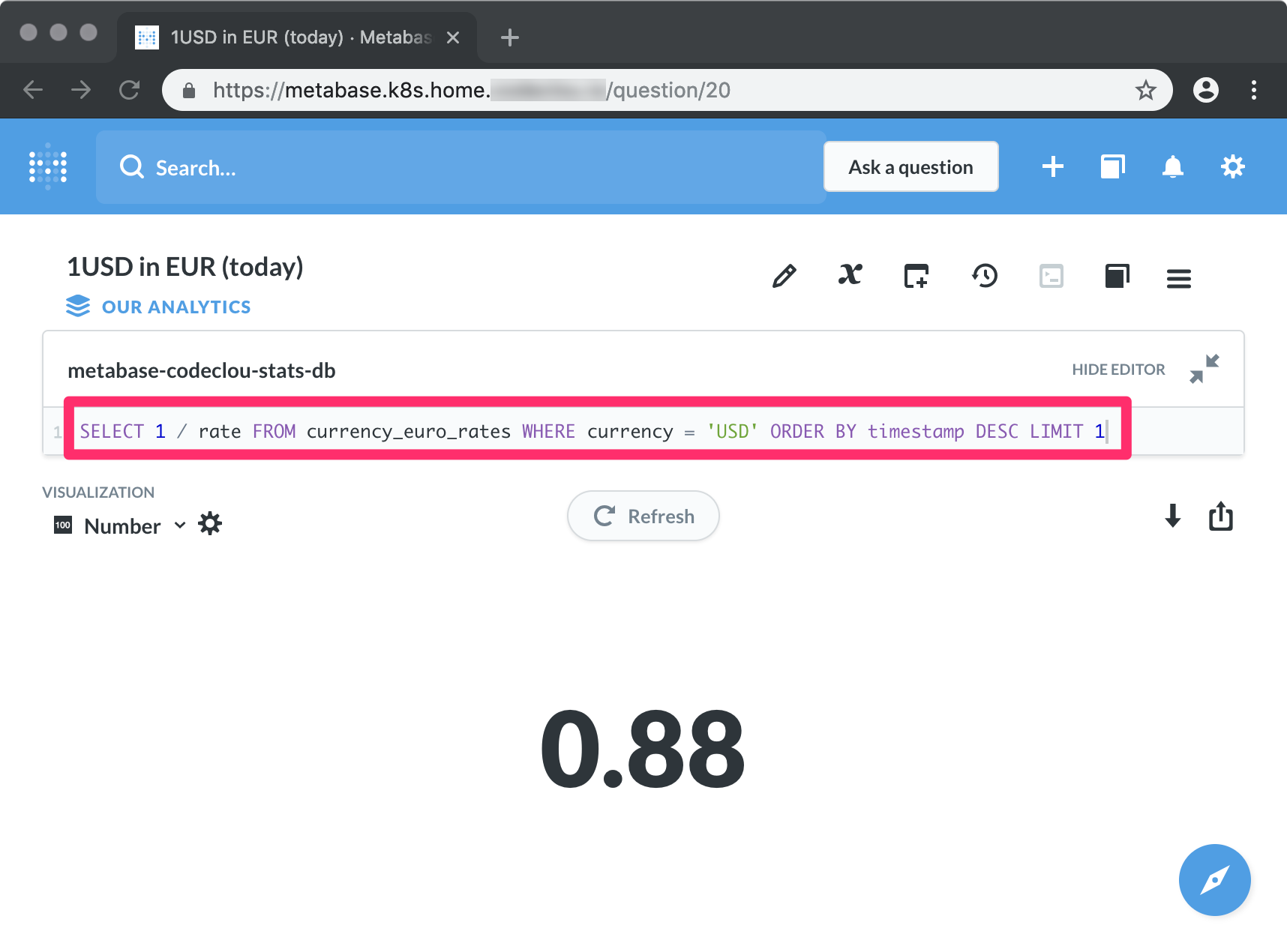

Since we also want to know the USD to EUR rate and me being to dumb to do it via the visual editor, I had to resort to using a native SQL querywhich works also very nice.

Metabase Dashbaord and Embeding

Embedding needs to be enabled by an Admin fist and should only be done if you can assure security of your data. I am anonymizing any data before writing it to the stats db anyway - GDPR compliance is given.

The demo shows how to create a Dashboard, add our KPI Widgets and copy the Public Embed code which you can put on any website now (you should consult some security personal first).

Serverless Sync Scripts deployed via fission.io

If you thought that was cool until now - then grab on to your desk - maximum warp!

First of all I was looking around for nice JSON REST APIs to get the USD to EUR exchange rates. But all of these portals - not naming any of them - want my personal data when signing up for an API key. Not acceptable for me.

The only thing that seems to be free to use is the data of the European Central Bank providing a daily XML file with exchange rates. And who can you trust anyway if not the ECB.

So the XML they provide looks like this. And after taking a bath in all that oldschool SOAPY memories we can go on …

github:f7a73efb871d8fc6c9c62c7d8df69adf

After installing fission in our Kubernetes Cluster (which worked almost out of the box - just had to fix the PersistentVolumeClaims to bind to my Filesystem PersistentVolumes) we had to install the commandline fission client.

And now we can do fancy stuff like

github:6e525eecdd48642106ea94827c86b733

Deploy the serverless code via fission cli like so:

shell:fission function create --name sync-currencies --env nodejs --code sync-currencies.js

Execute the code manually like so:

shell:fission fn test --name sync-currencies

And set a cronjob trigger to run it daily like so:

shell:fission tt create --name sync-currencies --function sync-currencies --cron "0 1 * * *"

Awesome! Is it really that simple …… wait what is this ….. NPM DEPENDENCIES …. Ok It is still awesome with dependencies, but a little less fun.

Lets install the needed dependencies to write our sync code. We need an xml-parser, postgres client and date libs.

shell:yarn add axios fast-xml-parser date-fns he pg

Here is the code to fetch the XML and parse it to a nice JSON like structure.

github:996167eea65d2e00e48938115aa7089b

Now you can put all this together and write some postgres code to insert the data. If you are interested in fission deployments with dependencies read this guide.

Conclusion

Just having started using fission and metabase instantly lead to awesome results. I will surely provide a followup blog post once I learned more fancy stuff. Dockerizing all the things is getting simpler and simpler :) Fission is making my life super easy. Before using fission I would have needed Jenkins jobs, building docker images, pushing them to a Docker Repository, triggering a pod to be installed with that image. Now it is just ‘fission function update’ and that’s it.

I have already started to put more data into Metabase like open tickets or response times from Jira Service Desk. So there will always be data to visualize and to learn from.

Just write us via the contact form if I should highlight any specific part on this in more detail.